I got a new Amazon Alexa a few weeks ago. [Full disclosure: I work for a company that’s owned by Amazon Web Services.] I find the technology a fun novelty, but I’m not sure it’s mature enough to be promoted to a central part of my home life beyond a music player and todo-list maker. That being said, it really is fun.

Right now, there are two major players in the voice control market: Siri and Alexa. I find that Siri works for me about half the time. Either I don’t leave enough of a pause between “Hey, Siri” and my request, or it goes off to think and never delivers a result. When it does deliver, the results are accurate and useful, even for nebulous requests. The natural language parsing is spectacular, and the results are always in line with my intent.

Alexa, on the other hand, has no problem recognizing me. I can say “Alexa” and continue directly with what I was going to say, with no awkward pause wondering whether the trigger word worked. The problem with Alexa is its limited vocabulary and the difficulty of discovering or remembering what you can say. There are a lot of built-in first-party commands, such as “play artist/album/station,” “add xyz to my todo/shopping list,” “set a timer for 10 minutes,” and “turn on/off [name of connected-home device].” If you want to go off-menu, you can install a third-party skill. Skills are usually invoked in the form “ask [skill name] [something].” For instance, the built-in connected-home commands let me turn a light bulb on or off, but if I want to change its color, I have to install the vendor’s skill, then figure out the right syntax and grammar for “Alexa, ask LIFX to set the color of the living room light to blue.” That command is a mouthful. It would be much easier to say “Alexa, set the living room lights to blue,” but there isn’t native support for that level of control.

Both Siri and Alexa have their pluses and minuses, but I’m leaning more toward Alexa’s flawless recognition, despite its opaque set of commands. I end up memorizing or writing down the few I use.

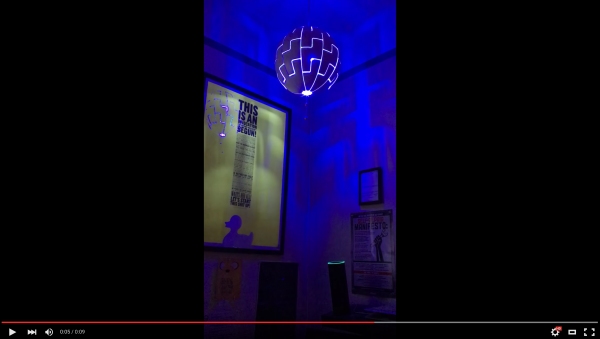

Picked up a pair of LIFX bulbs, which can be voice-controlled from the Echo. I don’t know how they compare to the Philips bulbs on features, but I do know they’re cheaper — you are not required to have a $50 basestation. Between the simple control through Alexa and the detailed control through the smartphone app, they work very well. In fact, the bulbs remember their color, so if you customize it through the app, the built in voice command to turn it on will retain the color. It’s fun to have color in my weird IKEA Death Star lamp:

While the bulbs are fun as a novelty, I’m not sure I could outfit the house with them. First, that would be expensive. Second, I’d want better manual controls than they offer. Turning off the lamp or wall switch turns off the bulb, of course, but then there’s no way to turn it back on by voice, because the bulb has no power. Turning the fixture back on manually makes the bulb turn itself on as well. I expect one of the WeMo light switches would be better for on/off control (though with no color control), but I have antique-style pushbutton switches in the house, and none of the home automation switches match that style.

Implementing an Alexa Skill

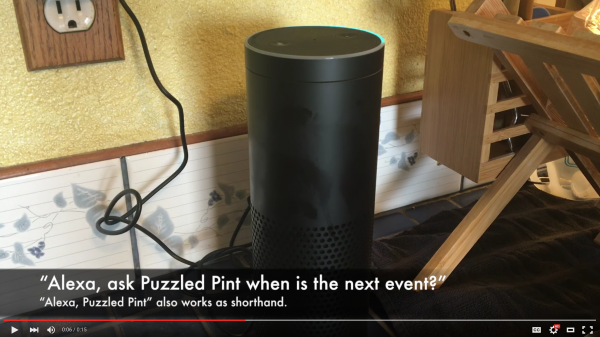

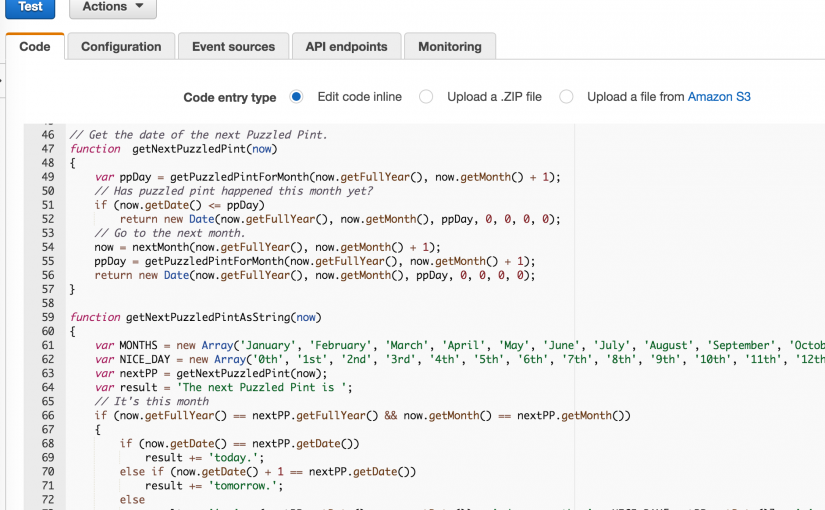

To learn what sorts of things you can and cannot do with the Echo, I wrote a small skill for it. The code is open source and on GitHub, if you want to follow along at home. At the time of writing, it hasn’t yet been approved for release, but you should be able to install it soon by searching for Puzzled Pint in the Alexa web app or smartphone app. When you ask, it tells you how many days away the next Puzzled Pint is.
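The heart of a skill like this is a small date calculation. Here’s a minimal Python sketch of it, assuming (the post doesn’t say this) that events fall on the second Tuesday of each month. The function names are hypothetical and not taken from the actual repository.

```python
import datetime


def second_tuesday(year: int, month: int) -> datetime.date:
    """Return the date of the second Tuesday in the given month."""
    first = datetime.date(year, month, 1)
    # date.weekday(): Monday == 0, Tuesday == 1, ..., Sunday == 6
    days_to_first_tuesday = (1 - first.weekday()) % 7
    return first + datetime.timedelta(days=days_to_first_tuesday + 7)


def days_until_next_event(today: datetime.date) -> int:
    """Days from `today` until the next event (0 if the event is today).

    ASSUMPTION: events are held on the second Tuesday of every month.
    """
    event_day = second_tuesday(today.year, today.month)
    if event_day < today:
        # This month's event has passed, so look at next month instead.
        if today.month == 12:
            event_day = second_tuesday(today.year + 1, 1)
        else:
            event_day = second_tuesday(today.year, today.month + 1)
    return (event_day - today).days


def speech_for(days: int) -> str:
    """Turn the day count into something Alexa can say."""
    if days == 0:
        return "The next Puzzled Pint is tonight!"
    if days == 1:
        return "The next Puzzled Pint is tomorrow."
    return f"The next Puzzled Pint is in {days} days."
```

In a skill you’d call something like `speech_for(days_until_next_event(datetime.date.today()))`. Keep in mind that `today()` uses the server’s clock, which may not match the listener’s time zone.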

Alexa skills have three components:

- A list of intents, each of which can take parameters.

- A list of sample words and phrases, each mapped onto an intent.

- Back-end Node.js or Python code that receives intents, performs work, and issues a response.
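To make the relationship between the three pieces concrete, here’s a rough Python sketch for a hypothetical favorite-color skill. It does not use Amazon’s actual interaction-model file format or the Alexa Skills Kit SDK. The intent names, the `Color` slot, and the `check_model` helper are all made up for illustration.

```python
import re

# HYPOTHETICAL favorite-color skill; every name here is made up.

# 1. Intents: intent name -> the slot (parameter) names it accepts.
INTENTS = {
    "SetFavoriteColorIntent": ["Color"],
    "GetFavoriteColorIntent": [],
}

# 2. Sample phrases: each maps to a single intent; {Slot} marks a parameter.
SAMPLES = {
    "my favorite color is {Color}": "SetFavoriteColorIntent",
    "set my favorite color to {Color}": "SetFavoriteColorIntent",
    "what's my favorite color": "GetFavoriteColorIntent",
}


# 3. Back-end code: one handler per intent, receiving the slot values.
def set_favorite_color(slots: dict) -> str:
    return f"Okay, your favorite color is {slots['Color']}."


def get_favorite_color(slots: dict) -> str:
    return "I don't know your favorite color yet."


HANDLERS = {
    "SetFavoriteColorIntent": set_favorite_color,
    "GetFavoriteColorIntent": get_favorite_color,
}


def check_model() -> list:
    """List inconsistencies between the three pieces (empty list == OK)."""
    problems = []
    for phrase, intent in SAMPLES.items():
        if intent not in INTENTS:
            problems.append(f"{phrase!r} maps to unknown intent {intent}")
            continue
        for slot in re.findall(r"\{(\w+)\}", phrase):
            if slot not in INTENTS[intent]:
                problems.append(f"{phrase!r} uses undeclared slot {slot}")
    for intent in INTENTS:
        if intent not in HANDLERS:
            problems.append(f"{intent} has no handler")
    return problems
```

The point of `check_model` is that the pieces only work together if they agree: every phrase has to name a real intent, every slot it mentions has to be declared, and every intent needs code behind it.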

Intents are like function definitions. They have a name and optional parameters. For example, you can create one intent that sets your favorite color to a parameterized value and another that queries the current value. The phrase list maps onto those intents and should contain every way you think your users will say something to Alexa. Examples might be “my favorite color is [color],” “set my favorite color to [color],” and “what’s my favorite color?” The parameter values must also be spelled out, so you’d have to give it a list of colors. If you leave purple off the list, then nobody can set their favorite color to purple. The back-end code runs as an AWS Lambda function. If you think of the intents as function definitions, then the Lambda code is the implementation of those functions. The back-end code also handles sessions and users. If you run a third-party service, you can map an Echo session to a user in your own system. That’s how skills can order an Uber or have a pizza delivered.
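Here’s a minimal sketch of what that Lambda implementation might look like in Python, handling the raw request JSON directly rather than going through an SDK. The intent names (`SetFavoriteColorIntent`, `GetFavoriteColorIntent`) and the `Color` slot are hypothetical and would have to match the skill’s interaction model. The sketch keeps the color in session attributes, which only last for one conversation; a real skill would more likely store it against the user ID in a database.

```python
# HYPOTHETICAL intent/slot names; they must match the skill's interaction model.


def build_response(text, attributes=None, end_session=True):
    """Wrap plain text in the JSON envelope a custom skill returns to Alexa."""
    return {
        "version": "1.0",
        "sessionAttributes": attributes or {},
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


def lambda_handler(event, context):
    """AWS Lambda entry point: dispatch on request type, then intent name."""
    request = event["request"]
    # Session attributes only survive while the session stays open. A real
    # skill would store the color against event["session"]["user"]["userId"].
    attributes = dict((event.get("session") or {}).get("attributes") or {})

    if request["type"] == "LaunchRequest":
        return build_response(
            "What's your favorite color?", attributes, end_session=False
        )

    if request["type"] == "SessionEndedRequest":
        # Alexa does not speak a response to this request type.
        return {"version": "1.0", "response": {}}

    intent = request.get("intent", {})
    name = intent.get("name")
    slots = intent.get("slots") or {}

    if name == "SetFavoriteColorIntent":
        color = slots.get("Color", {}).get("value")
        if not color:
            return build_response(
                "Sorry, I didn't catch that color.", attributes, end_session=False
            )
        attributes["favoriteColor"] = color
        # Keep the session open so a follow-up question can read the attribute.
        return build_response(
            f"Your favorite color is now {color}.", attributes, end_session=False
        )

    if name == "GetFavoriteColorIntent":
        color = attributes.get("favoriteColor")
        if color:
            return build_response(f"Your favorite color is {color}.", attributes)
        return build_response("I don't know your favorite color yet.", attributes)

    return build_response("Sorry, I can't help with that.", attributes)
```

Dispatching on the intent name is the “implementation of those functions” half of the analogy. The slot value arrives already matched, so the code never sees the raw sentence.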

Because of the grammar required to launch a skill, I can’t write one that responds to the phrase “Alexa, when is the next Puzzled Pint?” Instead, I’m stuck with long-winded, non-intuitive sentences like “Alexa, ask Puzzled Pint when is the next event?” Fortunately, a skill can also respond to an empty phrase, so “Alexa, ask Puzzled Pint” on its own works just as well and gives the default response.
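One way to get that default behavior in the back end is to send the bare invocation and the explicit question to the same answer. Depending on how the skill is configured, a bare “Alexa, ask Puzzled Pint” may arrive as a `LaunchRequest` rather than as an intent, so this Python sketch handles both. The `NextEventIntent` name is hypothetical, and the event date is passed in for simplicity.

```python
import datetime

# HYPOTHETICAL: "NextEventIntent" is an illustrative name, and the next
# event date is passed in rather than looked up.


def next_event_answer(today: datetime.date, event_day: datetime.date) -> str:
    days = (event_day - today).days
    if days == 0:
        return "The next Puzzled Pint is tonight!"
    unit = "day" if days == 1 else "days"
    return f"The next Puzzled Pint is in {days} {unit}."


def handle(request: dict, today: datetime.date, event_day: datetime.date) -> str:
    """Route the bare invocation and the explicit question to one answer."""
    bare_invocation = request.get("type") == "LaunchRequest"
    asked_explicitly = (
        request.get("type") == "IntentRequest"
        and request.get("intent", {}).get("name") == "NextEventIntent"
    )
    if bare_invocation or asked_explicitly:
        return next_event_answer(today, event_day)
    return "Try asking when the next event is."
```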

Another huge missing feature is a way to mark a spoken field as a wildcard/dictation/anything-goes value. For instance, you can say “Alexa, add ‘go to the bank’ to my todo list” and it magically works in the native todo-list skill. As I mentioned above, with a third-party Alexa skill you can define the phrase “my favorite color is [parameter],” but you have to list out every possible color that can be spoken. No periwinkle? Oh, well.
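Here’s a toy Python model of the gate described above. It is not how Amazon’s parser works internally. It simply treats the color list as a strict whitelist and only supports phrases that end with the slot, which is enough to show why periwinkle falls through. The intent name is hypothetical.

```python
# Toy model of the slot-matching gate described above. NOT Amazon's parser:
# it treats the value list as a strict whitelist and only supports phrases
# whose single slot comes last, so a simple prefix match is enough.
COLORS = {"red", "orange", "yellow", "green", "blue", "purple"}

PHRASE_PREFIXES = [
    ("my favorite color is ", "SetFavoriteColorIntent"),
    ("set my favorite color to ", "SetFavoriteColorIntent"),
]


def match(utterance: str):
    """Return (intent, slots) for a recognized utterance, or None."""
    text = utterance.lower().strip().rstrip("?.!")
    for prefix, intent in PHRASE_PREFIXES:
        if text.startswith(prefix):
            value = text[len(prefix):].strip()
            if value in COLORS:
                return intent, {"Color": value}
    # No match: in the model described above, the back end is never called.
    return None
```

A free-form dictation slot would amount to skipping the `value in COLORS` check and handing the raw text to the back end.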

Yet another problem is analytics. You only get logging once the speech parser has matched a phrase and called your Lambda function. That parser gates every call to your function and never logs anything itself, so if someone uses phrasing or color names that you didn’t think of, you have no way of knowing. Ideally, you could get a transcript of the various things said to your skill so that you can fine-tune it to user behavior.
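What you can do is log everything that does get through. Here’s a small Python sketch of a decorator that writes a one-line JSON summary of each request (type, intent name, raw slot values) before the handler runs. On Lambda, output from Python’s `logging` module typically ends up in CloudWatch Logs. The `summarize` and `log_requests` names are made up for this example, and the misses the parser swallows still never show up here.

```python
import functools
import json
import logging

# On Lambda, log output from the Python runtime typically lands in
# CloudWatch Logs. Only requests that already passed Alexa's parser get here.
logger = logging.getLogger("skill")
logger.setLevel(logging.INFO)


def summarize(event: dict) -> dict:
    """Extract the request type, intent name, and raw slot values."""
    request = event.get("request", {})
    intent = request.get("intent", {})
    slots = {
        name: slot.get("value")
        for name, slot in (intent.get("slots") or {}).items()
    }
    return {"type": request.get("type"), "intent": intent.get("name"), "slots": slots}


def log_requests(handler):
    """Decorator that logs a one-line JSON summary of every request."""
    @functools.wraps(handler)
    def wrapper(event, context):
        logger.info(json.dumps(summarize(event), sort_keys=True))
        return handler(event, context)
    return wrapper


@log_requests
def lambda_handler(event, context):
    # Placeholder body; the real intent dispatch would go here.
    return {"version": "1.0", "response": {}}
```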

While the flexibility and infrastructure for implementing skills are nice, the artificial siloing of each skill behind “Alexa, ask xyz to…” keeps them from feeling like natural language for anything more complex than “Alexa, open Cat Facts.”

On mine, if I simply say “Alexa, Cat Facts,” it launches the skill as expected.

Yep. That’s another shortcut for launching a skill without parameters. If a skill requires parameters, you need the verb in there (“ask” or “tell,” for example).